Feb. 13, 2015 Perspectives Engineering

Powering science

As powerful machines approach speeds in the quadrillions of arithmetic calculations per second, supercomputers will bring certainty and speed to scientific predictions.

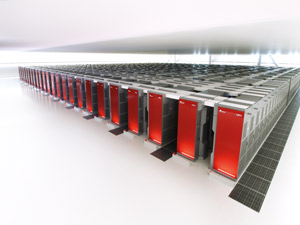

Figure 1: Powerful supercomputers like the K computer developed at the RIKEN Advanced Institute for Computational Science are essential for scientific discovery and prediction. © 2015 RIKEN


The Cray-1A, one of the world’s first supercomputers, was designed and manufactured in 1976 by the US-based company Cray Research. Capable of performing over 100 million floating-point operations per second (flops), with occasional bursts of 250 megaflops, the Cray-1A was used to generate the first ten-day weather forecasts at a spatial resolution of 200 kilometers and to model airflow over the surfaces of airplane wings to identify hot spots of aerodynamic drag.

In the four decades since then, supercomputer speeds have climbed so dramatically that the fastest machines are now more than a hundred million times faster than the Cray-1A. Thanks to these rapid advances, supercomputers have been central to numerous scientific breakthroughs, gains in industrial efficiency and solutions to pressing societal problems. From disaster mitigation to climate resilience, alternative energy, healthcare and global security, supercomputers have become indispensable to science, technology and development. For example, supercomputers sifted through streams of data to confirm the existence of the Higgs boson, whose theoretical prediction won François Englert and Peter Higgs the Nobel Prize in Physics in 2013. That same year, three researchers were awarded the Nobel Prize in Chemistry for developing multiscale computer models that reveal complex chemical processes. As the Nobel Prize website notes, “The computer is just as important a tool for chemists as the test tube.” Sophisticated simulations have also been crucial for understanding the causes and consequences of global warming, which can now be followed at ever finer resolution and over ever longer time frames.

Today, supercomputer architectures have changed drastically and often comprise expansive networks of tens of thousands of processors operating simultaneously. These workhorses often have to mine large databases to address one specific scientific question. In this scenario, the speed at which processors communicate with each other, access short-term memory and long-term data storage, and process irregular data patterns is just as important as their efficiency at solving linear equations. As a result, top supercomputers are now evaluated not only on calculation speed but also on their suitability as tools for science. So in addition to the Linpack benchmark, which measures how fast a supercomputer solves a large, dense system of linear equations, newer benchmarks such as High Performance Conjugate Gradient (HPCG), which solves a sparse linear system iteratively, and Graph 500, which runs breadth-first searches on very large graphs, are now used to compare supercomputer performance. The adoption of these new benchmarks is encouraging the design of supercomputers that better serve real-world needs.
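To make the contrast between the benchmarks more concrete, here is a toy sketch of the two kinds of kernel the newer benchmarks stress. It is not the HPCG or Graph 500 code: HPCG runs a preconditioned conjugate gradient on a much larger three-dimensional problem, and Graph 500 has its own graph generator and validation rules. The sketch assumes an unpreconditioned conjugate gradient on a small sparse matrix stored as a list of `{column: value}` rows, plus a breadth-first search that reports a simplified traversed-edges-per-second (TEPS) figure. The function names and test inputs are hypothetical.

```python
import time
from collections import deque

def matvec(A, x):
    """Sparse matrix-vector product; A is a list of {column: value} rows."""
    return [sum(v * x[j] for j, v in row.items()) for row in A]

def dot(u, w):
    return sum(a * b for a, b in zip(u, w))

def conjugate_gradient(A, b, tol=1e-10, max_iter=1000):
    """Unpreconditioned CG for a symmetric positive-definite sparse A."""
    x = [0.0] * len(b)
    r = list(b)                      # residual b - A x, with x starting at zero
    p = list(r)                      # first search direction
    rs_old = dot(r, r)
    for _ in range(max_iter):
        if rs_old ** 0.5 < tol:
            break
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)  # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs_old) * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x

def laplacian_1d(n):
    """Tridiagonal (-1, 2, -1) matrix: a small sparse SPD test case."""
    A = []
    for i in range(n):
        row = {i: 2.0}
        if i > 0:
            row[i - 1] = -1.0
        if i < n - 1:
            row[i + 1] = -1.0
        A.append(row)
    return A

def bfs_teps(adj, root):
    """Breadth-first search returning parents, edges in the reached component, and a simplified TEPS."""
    start = time.perf_counter()
    parent = {root: root}
    frontier = deque([root])
    while frontier:
        u = frontier.popleft()
        for v in adj[u]:
            if v not in parent:
                parent[v] = u
                frontier.append(v)
    elapsed = time.perf_counter() - start
    # Each undirected edge appears twice in a symmetric adjacency list.
    edges = sum(len(adj[u]) for u in parent) // 2
    teps = edges / elapsed if elapsed > 0 else float("inf")
    return parent, edges, teps

x = conjugate_gradient(laplacian_1d(5), [1.0, 0.0, 0.0, 0.0, 1.0])
parent, edges, teps = bfs_teps({0: [1, 2], 1: [0, 3], 2: [0], 3: [1], 4: []}, 0)
print(x, parent, edges)
```

Unlike a dense Linpack solve, both kernels tend to spend more time waiting on scattered memory accesses than doing arithmetic. This is why they reward a machine whose memory and network keep pace with its processors.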

Introducing K

In 2011, the RIKEN Advanced Institute for Computational Science (AICS) unveiled a supercomputer that delivered more than speed. The K computer, developed in partnership with Fujitsu, was the first to pass the long-sought milestone of ten petaflops (ten quadrillion flops). It also took first place in the Graph 500 big-data ranking in June 2014 and second place in the HPCG ranking in November 2014. The architecture of the K computer balances processing speed, data storage, memory and communication.
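The claim that today's fastest machines are over a hundred million times faster than the Cray-1A can be checked with the two figures the article gives: roughly 100 million flops for the Cray-1A and ten petaflops for the K computer. The workload below, a job that keeps the K computer busy for one second, is a hypothetical chosen only to make the ratio tangible, and it assumes both machines run at those sustained rates.

```python
# Figures quoted in the article
CRAY_1A_FLOPS = 100e6   # "over 100 million" flops for the Cray-1A
K_FLOPS = 10e15         # ten petaflops = ten quadrillion flops for the K computer

SECONDS_PER_YEAR = 365.25 * 24 * 3600

speedup = K_FLOPS / CRAY_1A_FLOPS

# Hypothetical workload: one second of full-speed computing on K would keep
# a Cray-1A busy for `speedup` seconds at its sustained rate.
cray_years_per_k_second = speedup / SECONDS_PER_YEAR

print(f"speedup: {speedup:.0e}")
print(f"1 s on K is about {cray_years_per_k_second:.1f} years on a Cray-1A")
```

The ten-petaflop figure alone already gives a factor of about 10^8, so one second on K corresponds to roughly three years of continuous Cray-1A computation.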

Importantly, the K computer is also exceptionally stable. Its more than 80,000 processors can run continuously for over 29 hours, and the system operates at 80 per cent efficiency for more than 300 days a year without unexpected failures or routine maintenance. Half of its capacity is used for strategic projects of national interest, in areas including drug discovery, new materials and energy production, nanoscience, disaster risk reduction and prevention, industrial innovation, and the origins of the Universe. Another 30 per cent is open for public use, and over 100 companies have accessed its services in the last two years. The K computer is fast, user friendly, reliable and well adapted to the study of complex scientific phenomena.

The AICS achieved these feats by applying several novel concepts and techniques. Crucial among them was the institutional merging of computer science (the design of the computers themselves) with computational science (the development of applications that run on advanced computers). At the AICS, researchers in the divisions of computer science and computational science work side by side.

The K computer also uses a customized network called Tofu (torus fusion), a six-dimensional grid through which its more than 80,000 processors, or ‘nodes’, communicate with each other. Nodes are assigned to projects, or ‘jobs’, in spatially compact groups so that exchanges between them are short and fast. Tofu represents a shift away from earlier networks that connected nodes hierarchically. Furthermore, to improve the stability of the system, the AICS lowered the operating temperature by an astounding 50 degrees Celsius by switching from air cooling to a system that combines air and water cooling.
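Why compact allocation on a multidimensional grid keeps exchanges short can be sketched with a little geometry. The example below treats the network as a six-dimensional torus in which every axis wraps around, which simplifies the real Tofu topology. The machine shape, the two job allocations and all function names are hypothetical. It computes each node's direct neighbors and the worst-case hop count, or diameter, within a job.

```python
from itertools import product

# Hypothetical machine shape: three large ring dimensions plus three small ones.
# Treating every axis as a ring is a simplification of the real Tofu topology.
SHAPE = (8, 8, 8, 2, 3, 2)

def torus_neighbors(coord, shape):
    """Distinct nodes one hop away along each axis, with wraparound."""
    neighbors = set()
    for axis, size in enumerate(shape):
        for step in (-1, 1):
            n = list(coord)
            n[axis] = (n[axis] + step) % size
            if tuple(n) != tuple(coord):
                neighbors.add(tuple(n))
    return neighbors

def torus_hops(a, b, shape):
    """Minimum hop count between two nodes, going the short way round each ring."""
    return sum(min(abs(x - y), size - abs(x - y)) for x, y, size in zip(a, b, shape))

def job_diameter(nodes, shape):
    """Worst-case hop count between any two nodes assigned to the same job."""
    return max(torus_hops(a, b, shape) for a in nodes for b in nodes)

# A compact 12-node block versus 8 nodes spread across the machine (both hypothetical).
compact = [(0, 0, 0, a, b, c) for a, b, c in product(range(2), range(3), range(2))]
scattered = [(x, y, z, 0, 0, 0) for x in (0, 4) for y in (0, 4) for z in (0, 4)]

print(len(torus_neighbors((0,) * 6, SHAPE)))
print(job_diameter(compact, SHAPE), job_diameter(scattered, SHAPE))
```

In this toy layout any two nodes of the compact job are at most 3 hops apart, while the scattered job needs up to 12. A scheduler that packs jobs tightly therefore keeps most messages on short paths.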

Zooming in

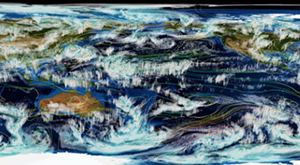

Figure 2: Researchers at JAMSTEC, AORI at the University of Tokyo and the RIKEN AICS used the K computer to simulate the global cloud distribution with an atmospheric model of 870-meter resolution. Ryuji Yoshida at the AICS Computational Climate Science Research Team visualized the simulation. © 2015 RIKEN


Since its launch, the K computer has been used by many research groups to simulate physical phenomena more accurately than previously possible. These projects would have been difficult, and sometimes even impossible, to achieve without the help of the K computer.

In 2014, a team from Nagoya University reconstructed the nanometer-scale protein shell of a poliovirus floating in water by simulating the movement and interaction of 6.5 million individual atoms1. Later that year, RIKEN collaborated with the University of Tokyo and Fujitsu to simulate the pumping mechanism of the heart2. To do this, the K computer spent two days computing a model of heart tissue made of 170,000 tetrahedrons representing its muscle fibers, integrating information from physics, engineering, medicine and physiology. The simulation shows how blood flows from the lungs, through the chambers of the heart, and back out to the body. The program has been used to model the hearts of real patients and to predict the outcomes of various treatment options, with the aim of improving surgical success rates.
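Simulations like the poliovirus study rely on molecular dynamics, as implemented in programs such as MODYLAS (ref. 1): compute the forces between atoms, then advance their positions and velocities by a small time step, millions of times over. The sketch below shows that loop for just two atoms on a line. It assumes a Lennard-Jones pair potential in reduced units and the velocity Verlet integrator, which are common textbook choices and not necessarily what MODYLAS uses for proteins in water. All names and parameters are illustrative.

```python
def lj_force_and_energy(r, epsilon=1.0, sigma=1.0):
    """Lennard-Jones pair force (positive means repulsive) and potential energy at separation r."""
    sr6 = (sigma / r) ** 6
    energy = 4.0 * epsilon * (sr6 * sr6 - sr6)
    force = 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r
    return force, energy

def pair_forces(x):
    """Forces on two atoms on a line, with atom 1 to the right of atom 0."""
    f, u = lj_force_and_energy(x[1] - x[0])
    return [-f, f], u

def velocity_verlet(x, v, dt, steps, mass=1.0):
    """Advance positions and velocities with velocity Verlet; record total energy each step."""
    f, u = pair_forces(x)
    energies = []
    for _ in range(steps):
        v = [vi + 0.5 * dt * fi / mass for vi, fi in zip(v, f)]  # half-step velocity update
        x = [xi + dt * vi for xi, vi in zip(x, v)]                # full-step position update
        f, u = pair_forces(x)                                     # forces at the new positions
        v = [vi + 0.5 * dt * fi / mass for vi, fi in zip(v, f)]  # second half-step velocity update
        kinetic = 0.5 * mass * sum(vi * vi for vi in v)
        energies.append(kinetic + u)
    return x, v, energies

# Two atoms released at rest, slightly beyond the potential minimum, so they oscillate.
x, v, energies = velocity_verlet([0.0, 1.5], [0.0, 0.0], dt=0.001, steps=5000)
drift = max(energies) - min(energies)
print(f"separation {x[1] - x[0]:.3f}, energy spread {drift:.2e}")
```

Velocity Verlet is popular in molecular dynamics because it keeps the total energy nearly constant over long runs. The hard part at the scale of 6.5 million atoms is evaluating the forces, and that work is what gets spread across many processors.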

Another group of scientists studied a new type of electrolyte for lithium-ion batteries: a superconcentrated lithium-salt solution. They used the K computer to elucidate the electrolyte’s unusual electrochemical stability and ionic transport at the atomic scale. Such novel electrolytes are expected to enable high-voltage lithium-ion batteries with short charging times3.

And based on simulations of the three-dimensional structures of three cancer-related proteins, yet another research group has identified more than 10 potential leads for new anticancer drugs, which are now in preclinical testing4.

The K computer has also far surpassed the Cray-1A’s weather outlooks. Whereas the older computer could produce projections at a resolution of 200 kilometers, the K computer can simulate the atmosphere at 870-meter resolution, fine enough to follow the dense, towering cumulonimbus clouds that bring thunderstorms (see Fig. 2)5. It can also predict the onset and behavior of a massive atmospheric disturbance known as the Madden–Julian Oscillation about a month in advance6. The oscillation is responsible for heavy rainfall in tropical regions and can lead to cyclones as well as heat and cold waves.
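A back-of-the-envelope count shows what the jump from 200 kilometers to 870 meters means. The sketch below assumes square grid cells tiling a spherical Earth of mean radius 6,371 km. Real global models such as the one in ref. 5 use other grid geometries, many vertical levels and shorter time steps at finer spacing, so this counts only horizontal cells and understates the true increase in work.

```python
import math

EARTH_RADIUS_KM = 6371.0                            # mean Earth radius
SURFACE_KM2 = 4.0 * math.pi * EARTH_RADIUS_KM ** 2  # roughly 5.1e8 square kilometers

def grid_columns(spacing_km):
    """Approximate number of square horizontal cells of the given spacing covering the globe."""
    return SURFACE_KM2 / spacing_km ** 2

cray_cols = grid_columns(200.0)   # Cray-1A era forecasts
k_cols = grid_columns(0.87)       # K computer, 870-meter global simulation
ratio = k_cols / cray_cols        # equals (200 / 0.87) ** 2

print(f"200 km grid: {cray_cols:,.0f} columns")
print(f"870 m grid:  {k_cols:,.0f} columns")
print(f"ratio:       {ratio:,.0f}")
```

The grid spacing is about 230 times finer in each horizontal direction, so the globe needs roughly 53,000 times as many columns: on the order of 13,000 at 200 kilometers versus several hundred million at 870 meters.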

Tipping point

The supercomputers of today, however, have not advanced enough to simulate natural phenomena with the speed and accuracy needed to meet all of our scientific, environmental and social demands—much faster and more responsive machines are needed. The AICS and Fujitsu have partnered once more to construct a computer that will take the science of prediction into uncharted territories. The major national project is supported by JPY 110 billion in funding from the Japanese government and targeted for completion by 2020.

The post-K supercomputer will be designed to address important unresolved problems in science and engineering. In building its core framework, the AICS plans to use state-of-the-art Japanese technologies and will collaborate with international partners to develop standardized software that can be applied across a global spectrum of research for increased user convenience. The supercomputer will inherit the K computer’s parallel processing structure and Tofu network to provide a scalable, easy-to-use computational resource.

A system of such capacity will enable researchers to simulate solutions to many existing and emerging societal problems. For example, by processing large volumes of satellite and radar data, it may become possible to warn city residents of imminent outbursts of torrential ‘guerrilla rain’, which can lead to flash floods that cause significant loss of life and damage to property.

Scientists now consider simulation to be the third pillar of scientific discovery, after theory and experiment. But to power science toward the tipping point where description turns into prediction, researchers of all kinds (computer scientists, computational scientists and domain specialists) will have to co-design a supercomputer suited to their needs. The AICS is offering them an opportunity to do that.

References

- 1. Yoshii, N., Andoh, A., Fujimoto, K., Kojima, H., Yamada, A. & Okazaki, S. MODYLAS: A highly parallelized general-purpose molecular dynamics simulation program. International Journal of Quantum Chemistry 115, 342–348 (2015). doi: 10.1002/qua.24841

- 2. Washio, T., Okada, J., Takahashi, A., Yoneda, K., Kadooka, Y., Sugiura, S. & Hisada, T. Multiscale heart simulation with cooperative stochastic cross-bridge dynamics and cellular structures. Multiscale Modeling & Simulation 11, 965–999 (2013). doi: 10.1137/120892866

- 3. Yamada, Y., Furukawa, K., Sodeyama, K., Kikuchi, K., Yaegashi, M., Tateyama, Y. & Yamada, A. Unusual stability of acetonitrile-based superconcentrated electrolytes for fast-charging lithium-ion batteries. Journal of the American Chemical Society 136, 5039–5046 (2014). doi: 10.1021/ja412807w

- 4. Yamashita, T., Ueda, A., Mitsui, T., Tomonaga, A., Matsumoto, S., Kodama, T. & Fujitani, H. Molecular dynamics simulation-based evaluation of the binding free energies of computationally designed drug candidates: Importance of the dynamical effects. Chemical and Pharmaceutical Bulletin 62, 661–667 (2014). doi: 10.1248/cpb.c14-00132

- 5. Miyamoto, Y., Kajikawa, Y., Yoshida, R., Yamaura, T., Yashiro, H. & Tomita, H. Deep moist atmospheric convection in a subkilometer global simulation. Geophysical Research Letters 40, 4922–4926 (2013). doi: 10.1002/grl.50944

- 6. Miyakawa, T., Satoh, M., Miura, H., Tomita, H., Yashiro, H., Noda, A. T., Yamada, Y., Kodama, C., Kimoto, M. & Yoneyama, K. Madden–Julian Oscillation prediction skill of a new-generation global model demonstrated using a supercomputer. Nature Communications 5, 3769 (2014). doi: 10.1038/ncomms4769

About the Researcher

Kimihiko Hirao

Kimihiko Hirao has been the director of the RIKEN Advanced Institute for Computational Science since its establishment in 2010. He has authored more than 300 papers in theoretical chemistry, including significant contributions to computational quantum chemistry.