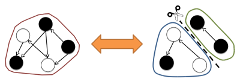

The difference between an ideal brain and a ‘disconnected’ model in which connections between neurons have been cut can be quantified in terms of ‘integrated information’. Image: Masafumi Oizumi, RIKEN Brain Science Institute

What if you could visualize consciousness as a geometrical pattern that shifts and morphs over time? A group of scientists think they can build such a 'consciousness meter' using complex mathematics, and they have just published their approach in the Proceedings of the National Academy of Sciences. They think that consciousness can be boiled down to the sum of information processing steps happening in the brain, and that if this can be measured and captured mathematically, we can arrive at an objective way to assess consciousness under anesthesia, in locked-in individuals, and during sleep.

The current methods for differentiating conscious and unconscious states, for example in patients, are based on recordings of brain waves and observations of behavior, but "they can easily trick us," says Masafumi Oizumi. "Even in vegetative states there are many levels of consciousness, and some patients can show 'aware' activity while being scanned though their behavior seems 'unconscious'." Oizumi is a theoretical neuroscientist and post-doctoral researcher at Japan's RIKEN Brain Science Institute who, in collaboration with University of Wisconsin neuroscientist Giulio Tononi, has advanced 'information integration' as the hallmark of consciousness. Our experience of the world is unified: we aren't conscious of the separate sides of the visual field, for example. It is the brain's unification of information from senses, memories, motivations and actions that Oizumi and Tononi propose as defining what it is to be conscious. Being unconscious—during sleep or anesthesia, for example—is a breakdown of this integration that can be seen in certain dynamics of brain activation patterns. There is as yet, however, no objective, comprehensive way to assess when this breakdown occurs.

The idea that the conscious brain is integrating inputs is broadly accepted in brain science—Christof Koch, a pioneer in the field, called it "the only really promising fundamental theory of consciousness"—and this integration is thought to be a necessary part of consciousness. What is more radical, says Oizumi, is the contention that this is also sufficient: beyond integration, nothing else is needed for consciousness to arise. Making use of a branch of mathematics called information geometry, Oizumi and colleagues think that the 'integrated information theory' of consciousness (IIT) can not only make useful predictions but explain many existing phenomena in the science of consciousness.

Tononi once told The New York Times: “One out of two isn’t a lot of information, but if it’s one out of trillions, then there’s a lot.” He was referring to the binary states of computer transistors or the photodiodes in cameras which, being either on or off, individually can't encode very much information compared to the 'trillions' of possible states the brain can have at any one moment. Considering the brain as an information processor shuffling bits is not a new approach, but according to IIT it's not the sheer volume of information itself that matters but how it's put together.
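To put rough numbers on Tononi's comparison, here is a minimal sketch in Python. It rests on two assumptions that are not in the quote: every state is taken to be equally likely, and 'trillions' is read as exactly 10^12 purely for illustration. The function name `bits_to_single_out` is made up for this example.

```python
import math

def bits_to_single_out(n_states: int) -> float:
    """Bits of information gained by picking one state out of
    n_states equally likely alternatives (assumption: uniform odds)."""
    return math.log2(n_states)

# A photodiode or transistor: one of two states (on or off).
photodiode_bits = bits_to_single_out(2)

# A system with a trillion possible states (10**12 is an illustrative
# stand-in for Tononi's 'trillions', not a measured figure).
trillion_bits = bits_to_single_out(10**12)

print(f"one out of two:        {photodiode_bits:.1f} bit")
print(f"one out of a trillion: {trillion_bits:.1f} bits")
```

Under these assumptions a single on-or-off element carries one bit, while singling out one state among a trillion carries roughly forty. As the article notes, IIT holds that this raw count is not the whole story: what matters is how the information is put together.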

With information geometry, the vast number of brain states is treated like a multi-dimensional object. Though the resulting shape, called a manifold, is far more complex than a cube or sphere, applying geometrical techniques makes it possible to 'measure distances' within the manifold in much the same way you would measure the sides of a triangle. Oizumi, along with information geometry developer Shun'ichi Amari of RIKEN and Naotsugu Tsuchiya of Monash University in Australia, looked at the differences between an ideal densely connected brain and a 'disconnected' model in which connections between neurons had been cut. This procedure eliminated the integrated information in the model brain; in information-space terms, it increased the distance from the disconnected model to the ideal. The amount of integrated information was thus defined as the length of the shortest, most direct path between the 'disconnected' and ideal models of the brain.
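The idea of measuring a 'distance' between a connected system and its disconnected counterpart can be illustrated with a toy calculation. The sketch below is not the researchers' measure: it is a deliberately simplified stand-in that assumes just two binary units, describes them by a static joint probability table, and takes the 'disconnected' model to be the product of each unit's own marginal distribution. The distance used is the Kullback-Leibler divergence, a standard quantity in information geometry. All function names and the example probabilities are invented for illustration.

```python
import math

def kl_divergence(p, q):
    """Kullback-Leibler divergence D(p || q) in bits between two
    distributions listed over the same states."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def disconnected(joint):
    """'Cut' version of a 2x2 joint table: each unit keeps its own
    marginal distribution, but all dependence between units is removed."""
    px = [sum(row) for row in joint]         # marginal of the first unit
    py = [sum(col) for col in zip(*joint)]   # marginal of the second unit
    return [[px[i] * py[j] for j in range(2)] for i in range(2)]

def distance_to_disconnected(joint):
    """Distance (in bits) from the full model to its disconnected model."""
    cut = disconnected(joint)
    flat_full = [v for row in joint for v in row]
    flat_cut = [v for row in cut for v in row]
    return kl_divergence(flat_full, flat_cut)

# Hypothetical example tables: two units that usually agree,
# and two units that ignore each other entirely.
coupled = [[0.45, 0.05],
           [0.05, 0.45]]
independent = [[0.25, 0.25],
               [0.25, 0.25]]

print(f"coupled units:     {distance_to_disconnected(coupled):.3f} bits")
print(f"independent units: {distance_to_disconnected(independent):.3f} bits")
```

In this toy setting, cutting the link between units that already ignore each other changes nothing, so the distance is zero, whereas the coupled pair sits a measurable distance away from its disconnected version. The published approach works with the dynamics of many interacting elements rather than a single static table, so this should be read only as a picture of the underlying intuition.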

Not everyone is convinced that this measure is such a simple route to explaining consciousness. As University of Sussex professor Anil Seth recently pointed out, this approach could spuriously ascribe consciousness to any instance of integrated information. Oizumi brushes off this concern: "A digital camera can process a lot of information, but no one thinks it is conscious. According to our theory, that's because there is no integration inside it, just non-interacting photodiodes that would continue to operate independently even if disconnected. The processing in the brain, on the other hand, is not independent, and would change drastically if you cut the connections. This is the difference that is being quantified" by the measure of integrated information called phi. Mathematically, this method also allows the researchers to discount correlations masquerading as integration that actually arise from noise or coincidence, so that you don't end up with 'conscious' cameras.

The idea that integration is the pillar of consciousness is also increasingly supported by more than just manipulation of theoretical bits. In experimental settings, a loss of consciousness is marked by a breakdown of communication between brain areas. Local pockets of activity can remain, but the big picture of integrated awareness is gone. In another study, Oizumi is applying the integrated information approach to the problem of conscious perception, in collaboration with Tsuchiya, Andrew Haun of the University of Wisconsin and neurosurgeons at the University of Iowa. In that study, neurosurgical patients looked at faces on a screen while the electrical activity of their brains was recorded. The faces could be hidden from conscious awareness either by being flashed very quickly or with other masking stimuli that effectively caused the visual system to suppress what was seen. The patients reported what they saw in both normal and 'unconscious' states and their different percepts were reflected in the intracranial recordings.

The test of the integrated information theory came when the scientists visualized the patterns of information in the data from the brain recordings. When subjects reported consciously seeing a face, these patterns were integrated and very similar, regardless of which experimental condition the data came from. Even when a face was definitely presented, the patterns were completely distinct depending on whether the face was actually 'seen'. This implies, says Tsuchiya, that subjective conscious perception of the faces was closely related specifically to integrated information patterns. The authors think that this shows that there is a link between how information is structured and consciousness, and that this link can be observed experimentally, an important point for probing the contents and substrates of consciousness.

The more immediate utility of experiments like this is that they may help us develop better ways to assess consciousness. Current technologies to monitor the depth of anesthesia, such as the bispectral index, are effectively poorly understood black boxes. In contrast, Oizumi suggests, the information-theoretic approach could offer the possibility of automated determination of consciousness level from brain recordings. Such a tool could be applied across the spectrum of consciousness states, for example to minimize the amount of drugs used to induce anesthesia and unconsciousness.

This article, by Amanda Alvarez, originally appeared on Neurographic, licensed under CC BY 4.0.