Jul. 4, 2024 Research Highlight Biology

How the hippocampus records memories in stereo

Researchers have identified two pathways in the hippocampus that store broad, conceptual memories in tandem with more detailed ones

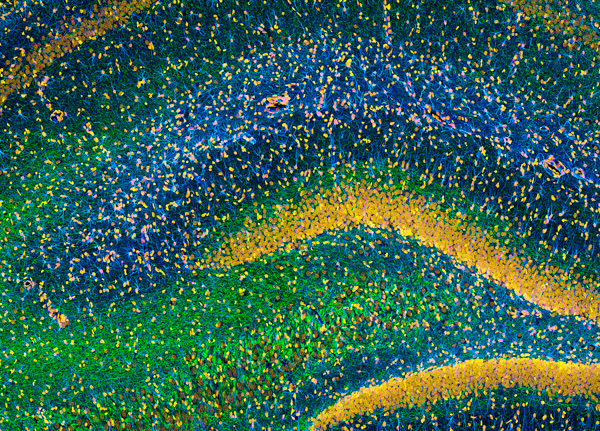

Figure 1: Light micrograph of a section through the hippocampus of a rat’s brain. Two RIKEN researchers have shown how the hippocampus records both detailed memories and more-conceptual ones. © THOMAS DEERINCK, NCMIR/SCIENCE PHOTO LIBRARY

The mechanism by which our brains record events in stereo, with one channel recording details and another recording general impressions, has been elucidated by two RIKEN neuroscientists [1,2].

Our memories have two aspects to them: they allow us to recall specific episodes from the past, but they also enable us to draw general concepts from them. For example, we can recall the specifics about a recent visit to see a friend, but we can also extract generalizations about their appearance and personality.

The brain region known as the hippocampus is responsible for both abilities, but just how it manages to do this has been unclear.

Louis Kang of the RIKEN Center for Brain Science (CBS) has long been interested in how memories are stored in the hippocampus. “The hippocampus has always fascinated me,” he says. “I think the origin of memories is quite mysterious, even now.”

Interestingly, the hippocampus receives two inputs: one in which information about experiences arrives directly from the brain region upstream of it, and another in which that information takes a detour through a further brain region, where it undergoes processing.

Kang and Taro Toyoizumi, also of CBS, wondered whether the direct pathway, which signals information with dense neural activity, represents memories as general impressions, while the indirect pathway, which signals information with sparse activity, represents all their details.

To test this conjecture, they used techniques from statistical physics (both researchers share a background in physics) to create a mathematical model of neural circuits in the hippocampus. The pair then tested the model using images of three items of clothing: sneakers, trousers and coats. They found that the sparse, indirect pathway stored the details of each image, while the dense, direct pathway generated general conceptual images of each kind of clothing.
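The intuition behind the conjecture can be illustrated with a toy calculation. The sketch below is not the researchers' model: it assumes a hypothetical encoding scheme (a random projection followed by keeping only the most strongly driven units), and every function name and parameter value is made up for illustration. It encodes several noisy examples of one concept twice, once with half of the units active and once with 2% active, and compares how correlated the resulting codes are.

```python
import itertools
import random


def make_examples(n_features, n_examples, flip_prob, rng):
    """Return noisy binary copies (examples) of one random concept pattern."""
    concept = [rng.choice((0, 1)) for _ in range(n_features)]
    return [
        [bit ^ (rng.random() < flip_prob) for bit in concept]
        for _ in range(n_examples)
    ]


def encode(pattern, weights, active_fraction):
    """Assumed scheme: random projection, then keep the top fraction of units."""
    drives = [sum(w * x for w, x in zip(row, pattern)) for row in weights]
    n_active = max(1, round(active_fraction * len(drives)))
    threshold = sorted(drives, reverse=True)[n_active - 1]
    return [1 if d >= threshold else 0 for d in drives]


def correlation(a, b):
    """Pearson correlation between two binary codes."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def mean_pairwise_correlation(codes):
    """Average correlation over all pairs of codes."""
    pairs = list(itertools.combinations(codes, 2))
    return sum(correlation(a, b) for a, b in pairs) / len(pairs)


def run_demo(seed=0):
    """Encode examples of one concept densely and sparsely; compare overlap."""
    rng = random.Random(seed)
    n_features, n_units, n_examples = 100, 1500, 8  # illustrative sizes
    examples = make_examples(n_features, n_examples, flip_prob=0.2, rng=rng)
    weights = [
        [rng.gauss(0.0, 1.0) for _ in range(n_features)]
        for _ in range(n_units)
    ]
    dense = [encode(x, weights, active_fraction=0.5) for x in examples]
    sparse = [encode(x, weights, active_fraction=0.02) for x in examples]
    return mean_pairwise_correlation(dense), mean_pairwise_correlation(sparse)


dense_corr, sparse_corr = run_demo()
print(f"dense codes:  mean pairwise correlation = {dense_corr:.2f}")
print(f"sparse codes: mean pairwise correlation = {sparse_corr:.2f}")
```

Under these assumptions, the dense codes should come out more correlated with one another than the sparse ones. Strongly overlapping codes tend to blend into a shared, concept-like pattern, whereas weakly overlapping codes keep individual examples apart, which is the division of labor the conjecture proposes.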

At this stage, the model provided a plausible description of what could be happening in the hippocampus, but more evidence was needed to support it. “Our model proposed one possible explanation for how the brain remembers both example-like and concept-like memories,” says Kang. “But other explanations were also possible.”

To obtain further confirmation, the researchers tested their model against freely available online recordings of neurons in the mouse hippocampus. They noticed that a signature generated by the model was also present in the recordings. “This supports the possibility that the brain behaves according to our model,” says Toyoizumi.

Finally, the two researchers applied the same approach to machine learning, so that neural networks represent information in both example-like and concept-like forms. This resulted in improved performance when distinguishing between similar images and identifying their common features. “This work shows how biological intelligence can inspire better algorithms for artificial intelligence,” notes Kang.

Louis Kang (right) and Taro Toyoizumi (left) have shown how two kinds of memories are stored in the hippocampus: more detailed, example-like memories and more general, concept-like memories. © 2024 RIKEN

References

1. Kang, L. & Toyoizumi, T. Distinguishing examples while building concepts in hippocampal and artificial networks. Nature Communications 15, 647 (2024). doi: 10.1038/s41467-024-44877-0

2. Kang, L. & Toyoizumi, T. Hopfield-like network with complementary encodings of memories. Physical Review E 108, 054410 (2023). doi: 10.1103/PhysRevE.108.054410